When I was a Kid Hi-Fi Crazy many years ago, "specs" were really important to me and to all of my Kid Hi-Fi Crazy friends. Being kids, we naturally had no money, and couldn't afford to buy anything—or at least not anything that wasn't either a kit or second-hand. Even so, we were all very much into at least being aware of the newest and finest of everything the audio industry had to offer.

That's why we all became literature collectors and, whether from manufacturers' product "handouts" or from magazine reviews, made it a point to learn as much as we could about the state of the audio art.

We all knew, sometimes to five decimal places, the frequency response, the total harmonic distortion (THD), the intermodulation distortion (IM), the signal-to-noise ratio (S/N), the slew rate and slew-induced distortion (SID), the (possibly related) transient intermodulation distortion (TIM), and just about every other significant or imagined-to-be-significant specification for just about every significant or imagined-to-be-significant hi-fi product then on the market. And we were, as a great many modern audiophiles still seem to be, certain that doing so was somehow important.

Gathering all those bits of information was easy at the time: Just about every manufacturer, of just about everything in audio, published "spec sheets" and, with no subjective (What does it sound like?) reviewing available until years later, when J. Gordon Holt came along, the magazine reviews of the time featured little else. (I have heard, but NOT personally verified, that the word "sound" was never once used by Julian Hirsch, for decades the world's leading hi-fi reviewer, in any one of his more than 4000 tests.)

My first clue that specs might not be as vitally important as we had all imagined came from a truly unexpected source: In my late teens, when, by working after school, I started to have a little money to spend on my (first hi-fi, then, later, stereo) system, I heard that RCA's Broadcast Audio Division had a two-way coaxial speaker, the LC-1a, that I thought I might want to own. (These were, I have been told, the same speakers as were originally installed around that time in the world-famous Cinerama Dome Theater in Hollywood.) With RCA having an office relatively near me, I called to ask for a spec sheet; was put on to one of their engineers; and asked for what I wanted. He told me that he would send it to me (and, in fact, wound up sending me the entire RCA Broadcast Audio catalog). Then, because I guess I must have sounded as young as I was, he asked me if I knew how to read speaker specs, including not just the numbers, but frequency response curves and polar pattern diagrams. When I told him that I did, he surprised me with something to the effect of, "Good, then you'll understand that these speakers sound a whole lot better than they look on paper."

The next clue, albeit in the opposite direction, came later, in the 1970s and 80s, when, using massive amounts of negative feedback, Japanese mid-fi manufacturers started producing moderately-priced audio electronics that had published total harmonic distortion (THD) claims of less than 0.001%. This was very much lower than that of even the most expensive and best sounding hi-fi gear to that time, and promised great things. There was only one problem: the sound sucked. Even though spectacular frequency response extension and linearity were claimed along with near-zero distortion, this gear was no joy to listen to. The perceived reality was far different from the claims, and we could only wonder if the tests were false, or if they were true but the wrong things were being tested, or if they were both true and of the right things, but the results were somehow being presented in a misleading manner.

That kind of presentation is certainly to be found, and its most common example is the "frequency response curve" often packed with High-End phono cartridges. These long, narrow strips of paper purport to show the tested performance of the individual phono cartridge that they are packed with. That would be both helpful and informative if it were meaningful, but, unfortunately, it isn't. Instead, the "curves" are purposely deceptive. By showing a range of (typically) 10Hz to (typically) 35 or 40kHz on the horizontal axis, in (typically) 10 to 20dB increments on the vertical axis, the cartridge manufacturers produce a near ruler-flat frequency response "curve" that, while completely true as presented, totally conceals any frequency response variations that might be present, including the rising high-frequency response typical of moving coil cartridges.
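To see why a coarse vertical scale flattens a curve, here is a minimal sketch of the arithmetic. The strip height, the number of divisions, and the 3 dB treble rise are all hypothetical values picked for illustration, not measurements taken from any real cartridge chart.

```python
def printed_height_mm(deviation_db, db_per_division, divisions, chart_height_mm):
    """Return how tall a response deviation appears on a printed chart."""
    total_db = db_per_division * divisions   # full span of the vertical axis
    mm_per_db = chart_height_mm / total_db   # print scale in millimeters per dB
    return deviation_db * mm_per_db


# Hypothetical strip chart: 30 mm tall with 5 vertical divisions.
CHART_HEIGHT_MM = 30.0
DIVISIONS = 5
RISE_DB = 3.0  # assumed high-frequency rise, for illustration only

for db_per_div in (20.0, 10.0, 1.0):
    height = printed_height_mm(RISE_DB, db_per_div, DIVISIONS, CHART_HEIGHT_MM)
    print(f"{db_per_div:.0f} dB/division: a {RISE_DB:.0f} dB rise is {height:.1f} mm tall")
```

Under these assumptions, the same rise that stands well clear of the baseline at 1 dB per division shrinks to about a millimeter at 20 dB per division, which is roughly the thickness of the printed line itself.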

It may be that things like that are why, over the years, a majority of reputable audio manufacturers have stopped releasing any performance specifications at all.

Another reason may be that, from a practical standpoint, a great deal of audio testing is of little or no value to the manufacturers' audiophile customers. How much does it really matter, for example, if a component has 0.001% or 0.002% THD? Or if its frequency response extends to 30 kHz or 50 kHz? Will a sensitivity difference of even 100% (3 dB, a doubling of power) change your mind about buying a pair of speakers?
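For anyone who wants to put those numbers on a common scale, here is a small sketch that converts them to decibels. It assumes the usual conventions: a power ratio converts as 10 times the base-10 logarithm, and a THD percentage is treated as an amplitude ratio relative to the signal, which converts as 20 times the logarithm. The function names are mine, for illustration.

```python
import math


def power_ratio_to_db(ratio):
    """Convert a power ratio (for example 2.0 for a doubling) to decibels."""
    return 10.0 * math.log10(ratio)


def percent_to_db(percent):
    """Express a distortion percentage as a level relative to the signal.

    Assumes the percentage is an amplitude (voltage) ratio.
    """
    return 20.0 * math.log10(percent / 100.0)


print(f"Doubling of power: {power_ratio_to_db(2.0):.2f} dB")
for thd in (0.001, 0.002):
    print(f"{thd}% THD is about {percent_to_db(thd):.1f} dB relative to the signal")
```

By this reckoning, 0.001% and 0.002% THD sit at roughly 100 dB and 94 dB below the signal, and a doubling of power works out to about 3 dB.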

And some testing can actually work against the finding of truth.

Just for example, consider this: A pair of speakers that measures perfectly "flat" in an anechoic chamber can still sound disappointing in actual home use. In addition to the obvious effects of room acoustics on perceived frequency response in the middle and upper frequencies, how the speakers are placed in the room relative to walls, floor, and corners will absolutely affect their audible bass response. Close proximity to each of those surfaces will add to the speaker's bass amplitude (not frequency extension), and the effect will be cumulative with each additional surface added, and clearly audible.
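The cumulative effect can be sketched with a textbook idealization: at wavelengths long compared with the distance to a rigid surface, each nearby boundary roughly doubles the sound pressure, which is about 6 dB. That figure is an assumption of the ideal case, not something measured in any real room, where walls flex and absorb and the gain is smaller.

```python
import math


def ideal_boundary_gain_db(num_boundaries):
    """Idealized low-frequency gain from placing a speaker near rigid boundaries.

    Assumes pressure doubles for each boundary (0 = free space, 3 = corner).
    """
    if not 0 <= num_boundaries <= 3:
        raise ValueError("num_boundaries must be between 0 and 3")
    pressure_ratio = 2.0 ** num_boundaries
    return 20.0 * math.log10(pressure_ratio)


placements = ["free space", "against one wall", "wall-floor junction", "corner"]
for count, name in enumerate(placements):
    print(f"{name}: about {ideal_boundary_gain_db(count):.0f} dB of bass gain (ideal case)")
```

None of this shows up in an anechoic measurement, which by design has no boundaries at all.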

Absolute bass frequency response is another sonic area where testing can be both irrelevant and misleading. Despite measured proof that a speaker is capable of response down to 20 Hz or even lower, if a room is smaller than one half the wavelength of the frequency to be reproduced (for a 20 Hz tone, that would be about 27 feet [8.23 meters]), that frequency simply cannot be propagated in it, and to buy speakers that go that deep for that room would simply be a waste of money.
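The half-wavelength figure is easy to check for any frequency. This sketch assumes a speed of sound of 343 m/s (air at about 20 °C); the exact result shifts a little with temperature, and a colder-air value near 331 m/s brings the 20 Hz answer down to roughly 27 feet.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumption: air at roughly 20 degrees C
METERS_PER_FOOT = 0.3048


def half_wavelength_m(frequency_hz, speed_m_s=SPEED_OF_SOUND_M_S):
    """Return half the wavelength, in meters, of a tone at the given frequency."""
    if frequency_hz <= 0:
        raise ValueError("frequency_hz must be positive")
    return speed_m_s / frequency_hz / 2.0


for freq in (20.0, 30.0, 40.0):
    meters = half_wavelength_m(freq)
    feet = meters / METERS_PER_FOOT
    print(f"{freq:.0f} Hz: half wavelength is about {meters:.1f} m ({feet:.0f} ft)")
```

Comparing the result with the longest dimension of your own room gives a quick sense of the frequency below which this argument applies.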

Perhaps even more obvious is the effect of speaker placement on imaging and soundstaging. As anyone who has ever set up a pair of speakers knows, even speakers designed and measured to be perfectly time-coherent (planars, for example) still need to be properly placed in order to make a coherent image. Just the fact that their specifications indicate that they ought to be able to do so makes no difference at all in the real world.

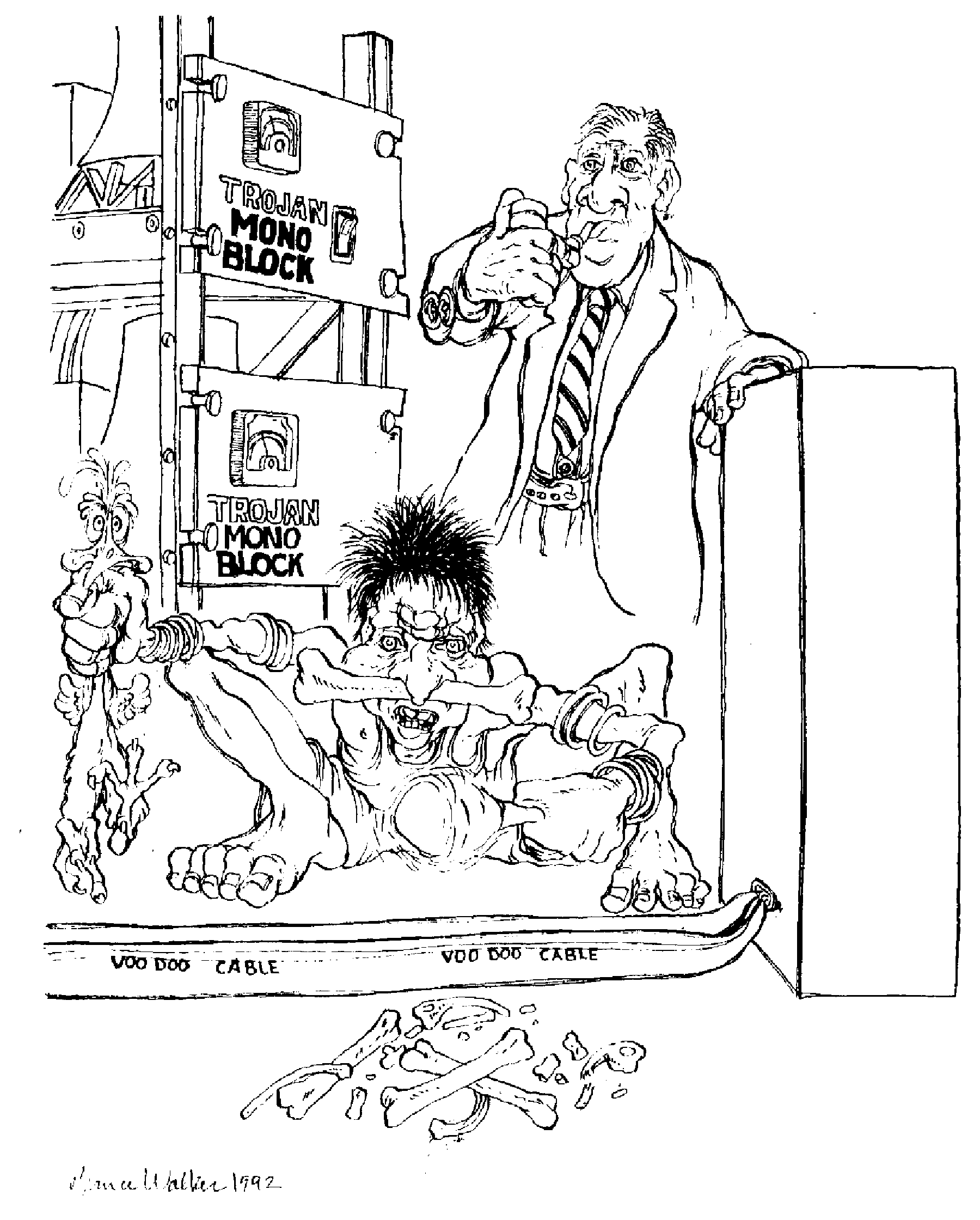

Other examples of the unreliability of testing to determine real sonic differences abound: Real and audible signal losses due to capacitive discharge effects in cables (remember that a cable not only has capacitance, but is, literally, a capacitor) can't be seen on an oscilloscope. As cancellation losses at the "zero" line, they're visible in a music signal only as reductions in peak-to-peak signal height, and are virtually impossible to determine.

Another kind of testing that's loved by audio objectivists is the "double-blind" test, where neither the tester nor the testee knows which of two or more things is being played. Although for many other kinds of testing, it's correctly praised as "the gold standard of test procedures," double-blind testing can only work where the presence, absence, or degree of one single characteristic is to be determined—the amplitude of one 1 kHz test tone relative to another, for example. Music NEVER presents only a single characteristic, so double-blind testing using a music signal simply doesn't apply, and testing of a signal that has only one characteristic may be of little audiophile interest.

That's not to say that testing is of no value. Manufacturers test their products all the time, to determine performance during the design process or to maintain quality control once a product is in production.

For them, where just one single thing at a time can be identified and tested, it's a necessity. For you, though, trying to make a purchase decision between two products, the better practice is just to use your ears.

Only you have your own personal tastes and preferences; only you have your existing system that the new product must be compatible with; and only you have your own listening room, where it's going to have to perform.