My Questions and Answers (MQA)

An interview of and by Andreas Koch on the subject of digital audio formats

In the age of digital audio, it appears that a new format tries to conquer the consumer market every few years, but the success-to-failure ratio of these formats is much smaller than 1. It makes us wonder why it is tried again and again when the chance of success is so slim. Sadly, the last widely successful audio format was MP3 thirty years ago; "sadly" not because it was a long time ago, but because of the significant quality loss and price erosion in music production it inflicted upon us.

Thirty years seems a very long time in our digital age, and wouldn't MP3 have enough problems and disadvantages to be easily displaced by a superior format in a matter of months? Apparently not, or else it could not have survived so long and still be so popular today.

What would it take to launch a new format today, and what problems could it possibly solve? The following article tries to answer this and other questions. Any resemblance between the suggested new format at the end of this article and any real format currently being introduced in the market would be purely coincidental.

Question: How much sustained bandwidth does a typical Internet connection have?

Answer: According to the "State of the Internet" published by Akamai Technologies in 2015, the global average bandwidth was 5.6Mbits per second, and that is average, not peak. In most technologically-developed countries, the average connection speed was around 10Mbits per second.

Question: How much bandwidth is required to stream stereo audio at various bit depths and sample rates?

Answer:

- CD quality (16-bits/44.1kHz) needs about 1.4Mb/second

- 24-bits/88.2kHz needs about 4.2Mb/second

- 24-bits/96kHz needs about 4.6Mb/second

- 24-bits/176.4kHz needs about 8.4Mb/second

- 24-bits/192kHz needs about 9.2Mb/second

- DSD (Single DSD, or DSD64) needs about 5.6Mb/second
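The figures above follow from multiplying bit depth, sample rate, and channel count. A minimal sketch, assuming two channels and 1 Mbit = 1,000,000 bits (the function names are illustrative, not from any library):

```python
def pcm_bitrate_mbps(bit_depth, sample_rate_hz, channels=2):
    """Raw PCM bit rate in Mbit/s: bits per sample x samples per second x channels."""
    return bit_depth * sample_rate_hz * channels / 1_000_000


def dsd_bitrate_mbps(multiple=64, channels=2):
    """Raw DSD bit rate in Mbit/s: 1 bit per sample at a multiple of 44.1 kHz."""
    return 1 * 44_100 * multiple * channels / 1_000_000


if __name__ == "__main__":
    for depth, rate in [(16, 44_100), (24, 88_200), (24, 96_000),
                        (24, 176_400), (24, 192_000)]:
        print(f"{depth}-bit/{rate / 1000:g}kHz: {pcm_bitrate_mbps(depth, rate):.2f} Mbit/s")
    print(f"DSD64: {dsd_bitrate_mbps():.2f} Mbit/s")
```

The list in the answer gives these values to one decimal place.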

Question: So can I stream audio at higher quality levels than compressed MP3 directly over the Internet?

Answer: As you can see from the above answers, there is enough Internet connection speed on a global basis for stereo audio at 24-bits and 96kHz PCM sample rate, or DSD at a Single DSD (DSD64) level—and that is without any compression at all, just pure unmolested digital audio as it may be used during recording. If your listening room is in the US, or most countries in Asia or Europe, there is enough bandwidth to stream twice as much.

Granted, in many cases your kids only want to surf the Internet when you are in your listening room streaming audio. And since you are a nice parent, you want to allow them that by limiting your own streaming bandwidth. In other words, there are many circumstances that don't always guarantee a certain set bandwidth. The limit may vary depending on total usage, and if you are getting close to that limit you may experience dropouts.

The point here is that no compression is needed to stream CD quality and higher resolution audio in most countries around the world. If the music is indeed recorded in a higher resolution than CD, then it can be streamed directly over the Internet to most listening rooms around the globe. Any bandwidth limitations that may still exist today in some places will surely ease significantly tomorrow (that is, very soon).
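The comparison made in this answer can be sketched in a few lines. The bit rates are the approximate figures quoted earlier in this article; the `share` parameter is a hypothetical knob for the fraction of the link left over once the rest of the household is online.

```python
# Approximate uncompressed stereo bit rates in Mbit/s, as quoted in this article.
STEREO_MBPS = {
    "16-bit/44.1kHz": 1.4,
    "24-bit/88.2kHz": 4.2,
    "24-bit/96kHz": 4.6,
    "DSD64": 5.6,
    "24-bit/176.4kHz": 8.4,
    "24-bit/192kHz": 9.2,
}


def streamable_formats(link_mbps, share=1.0):
    """Return the formats whose raw bit rate fits into `share` of the link speed."""
    budget = link_mbps * share
    return [name for name, rate in STEREO_MBPS.items() if rate <= budget]


if __name__ == "__main__":
    print(streamable_formats(5.6))              # global average quoted above
    print(streamable_formats(10.0))             # typical developed-country average
    print(streamable_formats(10.0, share=0.5))  # half the link used by others
```

This ignores protocol overhead and short-term fluctuations, which is why a figure sitting right at the limit may still produce dropouts.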

Question: If that is so, are there streaming services offering better than CD quality streams?

Answer: Qobuz started offering streams in 24-bit/96kHz in 2016. As early as 2015, Sony ran a test streaming pure DSD live from a concert in Japan. Anybody with Sony's free software and a compatible DAC could tune in and experience the concert in exactly the same format in which it was being recorded at that moment.

Question: Is all the music offered in higher resolution actually recorded in that format, or has it been recorded in a lesser format, perhaps, and then up-converted?

Answer: The sad truth to this is, yes, a large percentage of music is being recorded in one format and then published or released in another. With the increasing demand for higher resolution music, a lot of existing (already recorded) music is being up-converted to satisfy that demand. This trend is mostly driven by two factors:

- Most customers equate high resolution or better quality with higher bit depth and higher sample rate. In other words, in their minds a recording at, let's say, a sampling rate of 192kHz must automatically be better than one at 44.1kHz, regardless of whether the original recording was at 192kHz or not. However, a high sample rate does not automatically constitute a high-resolution recording; it is rather a prerequisite enabling the recording process (mic placement, editing, mixing, mastering, etc.) to take advantage of the wider bandwidth and fill it with musical art.

- As customers came to the idea in the late 90s that music should cost close to nothing (remember Napster), recording studios and music labels started to feel the budget crunch. Many of them are barely hanging on today, and are forced to cut corners wherever they can. Therefore, many will take existing masters that were recorded in a lesser format and simply up-convert them electronically to better appeal to their new high resolution aficionados. A large percentage, perhaps even the majority of all recorded music, exists in CD quality, and all too often "remastering" means starting with the existing 16-bit/44.1kHz master and re-releasing it at a higher sample rate.

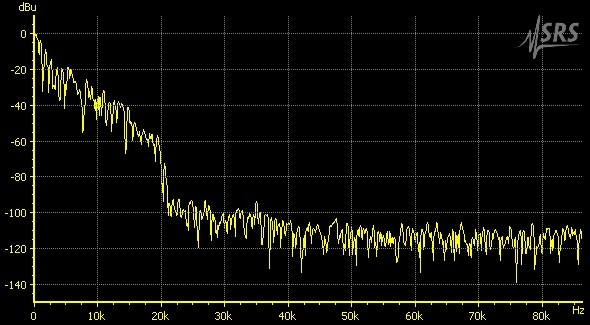

A few years ago I was asked by a mastering studio to run a spectrum analysis of an analog master tape that the studio was hired to digitize at 176.4kHz for a high resolution release. Below is the picture of my measurement:

You may wonder what that sudden and steep roll-off is at around 22kHz. In fact, this is a very typical phenomenon with every CD track. The inherent sample rate of 44.1kHz mandates a steep low-pass filter around half the sample rate (as noted by Nyquist), whereby lower frequencies are allowed to pass and higher frequencies need to be suppressed to avoid aliasing effects. In other words, the song on this so-called "analog master tape" was originally recorded and/or released on CD, then converted to analog, then put on tape, and now it is being converted back to digital for a "high resolution" release at 176.4kHz. In this case, the best sounding version of this song is not the new digital release, not the "analog master", but the original CD recording that obviously got lost over the years. Musical fidelity does not increase by moving away from the original recording (i.e. inserting more and more post processing steps and conversions), but by moving towards it (removing processing steps and conversions).
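The kind of check described here can be approximated in code: measure how much of a file's spectral energy lies above 22.05kHz. What follows is a minimal sketch using a naive DFT on synthetic test tones, not the measurement setup used for the picture above; all names in it are illustrative.

```python
import cmath
import math


def energy_fraction_above(samples, sample_rate_hz, cutoff_hz):
    """Fraction of (non-DC) spectral energy above cutoff_hz, via a naive DFT."""
    n = len(samples)
    total = 0.0
    above = 0.0
    for k in range(1, n // 2 + 1):  # positive-frequency bins, skipping DC
        freq = k * sample_rate_hz / n
        acc = sum(x * cmath.exp(-2j * math.pi * k * i / n)
                  for i, x in enumerate(samples))
        power = abs(acc) ** 2
        total += power
        if freq > cutoff_hz:
            above += power
    return above / total if total else 0.0


def tone(freq_hz, sample_rate_hz, n):
    """A unit-amplitude sine wave, n samples long."""
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate_hz) for i in range(n)]


RATE = 176_400
N = 441  # bin spacing of 400 Hz, so both test tones fall exactly on a bin

# Stand-in for an up-converted CD master: nothing above 22.05 kHz.
cd_like = tone(10_000, RATE, N)
# Stand-in for a genuinely wideband recording: extra content at 40 kHz.
wideband = [a + b for a, b in zip(tone(10_000, RATE, N), tone(40_000, RATE, N))]

if __name__ == "__main__":
    print(energy_fraction_above(cd_like, RATE, 22_050))
    print(energy_fraction_above(wideband, RATE, 22_050))
```

A fraction near zero for a 176.4kHz file is a hint, not proof, that the material passed through a 44.1kHz stage; real music needs windowing, averaging over many frames, and a noise-floor threshold.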

Question: Some streaming services are talking about offering "high sample rate" (not necessarily "high resolution") songs for a premium and in a new digital format. Are we about to see a new format war as we have seen so many times before with DAT, SACD, MP3, MiniDisc, DSD, etc., etc.?

Answer: The short answer is: quite possibly. And from these previous examples we know that the consumer will lose the most, and that eventually there will be a fallout whereby many of these formats will disappear.

For the long answer we need to understand that the first digital audio format, PCM, was popularized by the CD in the form of 16 bits and 44.1kHz sample rate. No royalties need to be paid to anybody for using PCM, the format is computer friendly, well understood by micro and digital signal processors, and is straightforward to process in the studio. The only two major downsides are:

- The requirement for relatively steep anti-aliasing filters resulting in pre-ringing effects; and,

- Its relative inefficiency at higher sample rates. PCM offers the same dynamic range from 0Hz up to the roll-off point of the anti-aliasing filter. For instance, a PCM signal at 24-bit/176.4kHz will give you a theoretical dynamic range of 144dB at 30kHz. This is overkill, as our ears are not capable of perceiving more than just a few dB at those higher frequencies, and only in short transient conditions.
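The 144dB figure comes from the usual rule of thumb of roughly 6dB per bit. A minimal sketch, assuming the simple ratio between full scale and one quantization step (the function name is illustrative):

```python
import math


def pcm_dynamic_range_db(bits):
    """Theoretical dynamic range of linear PCM: 20 * log10(2 ** bits)."""
    return 20 * math.log10(2 ** bits)


if __name__ == "__main__":
    for bits in (16, 24):
        print(f"{bits}-bit PCM: {pcm_dynamic_range_db(bits):.1f} dB")
```

The result depends only on the word length, not on frequency, which is the inefficiency described above: the same number of bits is spent at 30kHz as at 1kHz.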

It's easy to see why this became the standard for digital audio so fast. Only MP3 and other similar compression formats were able to compete with PCM on a global scale. And they could only do that by offering a clear advantage to the end user that PCM could not: Portability when digital audio gear was still too bulky to fit into your shirt pocket, and low bandwidth when the Internet was still very limited and storage expensive.

The point here is that a new digital format can only win and become popular against PCM when it can offer a clear and decisive advantage. Sound quality is not necessarily one of the popular advantages, or else MP3 could never have been as successful as it has been. So far, most formats in the past could only win with their offerings in convenience to the end user: CD vs. analog, MP3 vs. PCM, DVD vs. VHS. There were formats that competed on the basis of signal quality, but the winner was not always the one with the better signal quality (SACD vs. CD, Beta vs. VHS).

Question: What could possibly be the decisive advantage of a new format, such that streaming services would adopt it and change the world with it?

Answer: Of course the interest of these streaming services would be threefold:

- streaming bandwidth: from the above answers, we understand that today this is no longer an issue in most countries. If it still is in certain areas, it will very soon simply go away.

- storage: over the last few years, storage space became so inexpensive and available that this would no longer be decisive enough. Also, companies would hesitate encoding their digital libraries in a new format and then erasing all the originals in PCM. Most likely they would keep both formats for every song, therefore only increasing storage requirements.

- A claim for higher quality audio streaming would allow them to charge more for a better quality service option.

These points alone could hardly be strong enough reasons to invest in a format war against the all time winner so far: PCM.

Question: Does this mean that the world doesn't really need a new format right now, because the world doesn't have a big enough problem that a new format would be able to solve?

Answer: Exactly. If you see a new format emerge today, it is most likely motivated by a manufacturer's thirst for licensing revenue. If they market it cleverly, they could possibly count on some music label's support, because they always like to sell you their same existing recordings in yet a new format again.

Question: How then would a manufacturer or inventor of a new format have to market it to find a large number of adopters?

Answer: Generally, by combining the format with a feature or promise that the adopter or end user is really interested in. For instance, pick a streaming service catering to signal-quality-conscious customers, and promise a significant quality enhancement with the new format. A streaming service can reach a relatively large number of customers virtually overnight, and therefore provides an effective marketing tool. Furthermore, a streaming service is only one stop for the format creator: a single degree of separation, with no physical inventory and none of the logistics that come with it. Perfect. So let's create a new format on the basis of quality enhancement and have it be incompatible with PCM, so that everybody is forced to switch. No wait, that didn't work in the past. Let's make it compatible with PCM then, while at the same time allowing the streaming service to charge a premium for the "enhanced" service, and with every song encoded in the new format being playable on any standard PCM playback device. Win-win. The customer may get confused as to which is which, but who cares? It worked in the past.

Oh yes, the new format has to be proprietary and patentable so royalties can be collected. After all that is the entire reason to do it—selfish greed. As we have seen above, there is no other good reason, no customer benefit.

Question: Since we seem to enter a creative (or should I say "devilish"?) phase now in our interview, what else could we do in order to maximize the royalty stream?

Answer: How about a certification process whereby every hardware manufacturer is required to have the format creator certify each and every one of its new DAC models and products? This can be achieved by designing the new format in such a way that it requires a decoding algorithm inside each DAC that needs to be customized for each DAC. This is a labor-intensive proposition, but we will make the DAC manufacturer pay for it, on top of the license for each unit produced. That is one thing that MP3 missed out on. The fact that MP3 is free to decode may have helped its success, but we will ignore this fact, because we are after maximum royalty streams before our scheme gets "unfolded," which it will eventually.

Question: Wow, now we see dollar signs. What technology or algorithm could we use? Quick, I feel we have to move now!

Answer: First we could use a clever compression algorithm that down-samples an original with a high sample rate to something like 16/44.1kHz; at least it would be compatible with PCM on that level. Some of the remaining bandwidth we have won through the lossy sample rate reduction could be used to encode some of the higher sample rate information that got thrown out by the sample rate reduction. We could do it in a way so it is not so audible in the 16-bit/44.1kHz signal. That way the encoded signal is compatible with our old friend PCM and we can claim we are high resolution, because with the right equipment (certified and licensed by us) the decoded signal will be at a higher sample rate, and therefore in high resolution. At least that will be what most people will believe.
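The core trick of such a scheme, hiding side data in the low bits of each sample, can be sketched as follows. This is a toy illustration under loud assumptions: a 24-bit container whose 8 least significant bits are repurposed, with hypothetical function names. It is not the algorithm of any real format.

```python
LOW_BITS = 8                      # assumption: 8 low bits carry the side data
LOW_MASK = (1 << LOW_BITS) - 1    # 0xFF


def pack(sample_24bit, side_data):
    """Discard the low bits of the audio sample and store side data in their place."""
    if not 0 <= side_data <= LOW_MASK:
        raise ValueError("side data must fit into the repurposed low bits")
    return (sample_24bit & ~LOW_MASK) | side_data


def unpack(word):
    """Split a packed word into (truncated audio sample, side data)."""
    return word & ~LOW_MASK, word & LOW_MASK


if __name__ == "__main__":
    original = 0x123456
    packed = pack(original, 0xAB)
    audio, side = unpack(packed)
    print(hex(packed), hex(audio), hex(side))
    print("audio bits lost for good:", hex(original - audio))
```

A legacy PCM player simply plays `packed` as is, so the side data ends up as low-level noise; the original low 8 bits of the audio are not recoverable by any decoder.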

Question: Wait a minute. Didn't you just say that high sample rate is not necessarily high resolution? Also, this encoding algorithm for the new format is a lossy compression and therefore will impact the sonic performance, certainly the temporal content. Actually, wouldn't it be worse than the original?

Answer: You are right. As the main audio band between 0-20kHz will be encoded in 44.1kHz and around 16 bits after our lossy sample rate down conversion, the main and most important data of our audio signal will be just CD quality, even after it is uncompressed again. Marketing would never be able to handle so many lies without our additional help.

So here is an idea for how to help marketing with the quality lie: We add an apodizing algorithm that can counteract the negative effects that can happen in the analog-to-digital conversion and also in the digital-to-analog conversion. Many manufacturers already implement such filters in their DACs, and they actually work under certain circumstances. A claim for some limited sonic improvement wouldn't even be a complete lie. Since for this we would have to know some of the characteristics of each DAC in order to fine-tune our apodizing algorithm, it also helps us in explaining why we need a certification process for each product. In theory we would have to know the ADC and digital algorithms that were involved in the recording too, but that seems like a lot of trouble without a chance of payment. There are far fewer ADCs in the world than DACs, and most of them are owned by people with little money. With DACs it is quite the opposite, so we forget about the ADC and all the digital processing in the studio, and concentrate on the DAC as one of our cash cows. The side effects introduced during the recording process may actually be much more severe than the ones introduced by the DAC at home, because often the recording process requires multiple ADC and DAC conversions. But these are facts that we can easily ignore and hide.

Question: Let me recap this to make sure I understand the facts. This new format has the following features and characteristics:

- It reduces sample rate to 44.1kHz in its compressed form through standard sample rate conversion algorithms which are known to be as lossy as MP3 and others.

- It further reduces the audio signal from 24-bits to around 16 or 17, a process that further throws away vital information from the original signal that cannot be restored later. These remaining 8- or 7-bits will now be used to encode some of the high sample rate information that we threw out during the above sample rate down conversion. They can then be added to each 44.1kHz sample in the least significant bit positions. The above mentioned word length reduction from 24 bits down to 16- or 17-bits and the resulting higher noise floor will mask the effects of using these bits for the higher sample rate information. It is like making the signal quality slightly worse first so that the effect of the repurposed 7 or 8 least significant bits becomes less audible.

- The apodizing feature that we have added independently to the compression scheme has the potential to counteract some of the side effects that were introduced by the ADC and digital signal processing used during the recording process, as well as those that could be introduced by the DAC used during playback. But for this to work optimally we need to know the characteristics of the entire signal chain from studio to home.

Clearly no decisive advantage that could win us our format war; actually I can only see problems and disadvantages. How can we win this one? Seems like we could not win any honest victory, right?

Answer: That is where marketing has to help us. They are trained in creating a distortion field around reality by evading pointed questions and giving vague, imprecise, and inaccurate answers.

Here is how marketing could hide the facts in reference to your summary points above:

- We can say it is compatible with 44.1kHz, but never admit it is through sample rate conversion or some other lossy algorithm. Instead of compressing and uncompressing we will call it "wrapping" and "unwrapping" to give the impression that nothing ever gets lost or thrown away. Just like candy in its wrapper: sugar in, same sugar out. The important fact here though is that this sample rate conversion will always have a negative impact on the result, no matter what other process we introduce in addition. We always knew that the original unmolested source will always sound better than its sample-rate-converted version.

- We will point out that with careful dithering we can extend the resolution beyond the least significant bit, so beyond 16- or 17-bits total word length. This should offer enough diversion from the fact that we are throwing away 7- or 8-bits, not just 1 or 2. Customers won't know that dithering is not a substitute for lost bits.

- The apodizing feature will have to be our main point in the marketing campaign. We never admit any condition, or how limited this improvement really is, but instead carefully craft demo/comparison files where we can claim a "before" and "after" the encoding. It will be hard if not impossible if we do this too honestly, so we will have to select the "before" sample carefully in order to make sure it always sounds worse than the encoded "after" version. Under no circumstances do we want to allow reviewers or customers to create their own encoded version from their own originals. That would be too revealing. We have to be in control of that encoding process and any comparison test.
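The dithering claim in the second point above has a kernel of truth that is easy to demonstrate. The sketch below assumes TPDF dither (the sum of two uniform random values, each within half an LSB) and a level expressed in units of one LSB; the names are illustrative.

```python
import math
import random


def quantize(value_lsb):
    """Round a value (in units of one LSB) to the nearest integer step."""
    return math.floor(value_lsb + 0.5)


def mean_quantized(value_lsb, n, dither, seed=0):
    """Average of n quantized copies of a constant level, with or without TPDF dither."""
    rng = random.Random(seed)
    total = 0
    for _ in range(n):
        noise = (rng.random() - 0.5) + (rng.random() - 0.5) if dither else 0.0
        total += quantize(value_lsb + noise)
    return total / n


if __name__ == "__main__":
    level = 0.3  # a signal level well below one LSB
    print("without dither:", mean_quantized(level, 20_000, dither=False))
    print("with dither:   ", mean_quantized(level, 20_000, dither=True))
```

Without dither the sub-LSB level vanishes entirely; with dither it survives on average, at the price of added noise. That is a trade of distortion for noise within the remaining word length, not a way to bring back 7 or 8 discarded bits.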

Question: Coming back to our original goal to generate a hefty licensing revenue stream, where could we expect payments?

Answer: We get paid by the following:

- Encoding process for each song. We will have to enter strict agreements with music labels.

- Each song streamed by streaming services. We will have to enter strict agreements with each streaming service.

- Each product (DAC) that uses our decoding algorithm. We will have to implement a DRM (digital rights management) scheme that forces each equipment manufacturer to pay us a licensing fee for each and every product model sold. In addition, we will also implement a certification requirement that at the same time allows us to collect all the information about all the products that implement our algorithm. Who knows, that could become an important data base for us later on.

Question: Any caveats?

Answer: Most importantly, our scheme does not solve any problem that the world currently has. It is based on lossy algorithms and compressions that make assumptions about our auditory system. As history has shown with MP3 and others, the truth behind our claims about quality and high resolution of our new format will eventually come out. When that will happen is a fairly direct function of how well our marketing department will be able to hide the truth. In the meantime we just need to maximize our licensing revenue stream and then run.

Needless to say, there are superior and truly lossless compression algorithms available today with license-free decoders and better sonic performance that could easily steal our show with clear advantages, but they don't have our clever and focused marketing campaign with the reality distortion field. Ultimately, a format war is decided in marketing, not engineering. We have seen this all too often.

About the author: Andreas Koch was involved in the creation of SACD from the beginning while working at Sony. He led a team of engineers designing the world's first multichannel DSD recorder and editor for professional recording (Sonoma workstation) and the world's first multichannel DSD converters (ADC and DAC), and participated in various standardization committees worldwide for SACD. Later he went on as a consultant to design a number of proprietary DSD processing algorithms for converting PCM to DSD and DSD to PCM, and other technologies for D/A conversion and clock jitter control in DACs. In 2008 he co-founded Playback Designs to bring to market his exceptional experience and know-how in DSD in the form of D/A converters and CD/SACD players. In 2015 he took over the entire company. Earlier he was part of an engineering team at Studer in Switzerland, designing one of the world's first digital tape recorders, and then led a team of engineers working on a multichannel hard disk recorder. He also spent three years at Dolby as the company's first digital design engineer.

All of this has given him a well-rounded foundation of audio know-how and experience. He can be reached via email at [email protected].